What is Learning Rate?

Learning rate, also called step size, is a hyperparameter that controls how much a model’s parameters (weights) change at each update when training a neural network, such as a convolutional neural network (CNN). The learning rate has a significant influence on a model’s performance and how quickly it can learn.

Techopedia Explains the Learning Rate Meaning

The learning rate is the rate at which a model adjusts in response to errors. Machine learning (ML) researchers and developers typically pick a value between 0.0 and 1.0.

This value determines the extent to which a model adjusts its weights during the training process. Researchers aim to select the optimal learning rate so the artificial intelligence (AI) model can learn quickly and reliably.

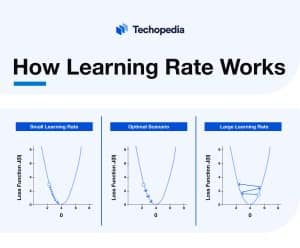

If the value is too small, the model will learn slowly or get stuck. Likewise, if the value is too high, the model will adjust too rapidly, making it unable to generate reliable predictions.
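To make the effect of a too-small or too-large value concrete, here is a minimal Python sketch. It assumes plain gradient descent on a toy function, f(w) = w squared, which is not taken from any particular model; the function name `gradient_descent` and the three values tried are illustrative choices.

```python
def gradient_descent(lr, steps=20, w0=1.0):
    """Minimize the toy function f(w) = w**2 and return the final weight."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * w       # derivative of w**2 at the current weight
        w = w - lr * grad    # the learning rate scales the size of each update
    return w


# Illustrative values only: one very small, one moderate, one too large.
results = {lr: gradient_descent(lr) for lr in (0.001, 0.1, 1.1)}
for lr, w in results.items():
    print(f"learning rate {lr}: final weight {w:.4f}")
```

Under these assumptions, the smallest value barely moves the weight away from its starting point, the middle value brings it close to the minimum at 0, and the largest value makes the weight grow in magnitude with every step.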

How Learning Rate Works

As outlined above, the learning rate determines how much a model adjusts its weights when training. To find the optimal learning rate, researchers and developers will typically experiment with a range of values to see which offers the best performance.

The optimal learning rate is whatever value offers a high rate of learning without becoming unstable. The end goal of selecting this value is to minimize the model’s loss function, i.e., the error between the predicted output and actual output of an ML model, to make the model more accurate.
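The trial-and-error search described above can be sketched as a simple sweep. This example assumes a one-weight linear model trained with full-batch gradient descent on a tiny made-up dataset, with mean squared error as the loss function; the data, the candidate values, and the helper names are all hypothetical.

```python
# Hypothetical toy data that follows y = 3x.
XS = [1.0, 2.0, 3.0, 4.0]
YS = [3.0, 6.0, 9.0, 12.0]


def mse(w):
    """Mean squared error between predictions w*x and targets y."""
    return sum((w * x - y) ** 2 for x, y in zip(XS, YS)) / len(XS)


def mse_grad(w):
    """Derivative of the mean squared error with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(XS, YS)) / len(XS)


def train(lr, steps=50):
    """Run gradient descent from w = 0 and return the final loss."""
    w = 0.0
    for _ in range(steps):
        w -= lr * mse_grad(w)
    return mse(w)


# Try a range of candidate learning rates and keep the one with the lowest loss.
candidates = [0.0001, 0.001, 0.01, 0.1, 0.5]
losses = {lr: train(lr) for lr in candidates}
best_lr = min(losses, key=losses.get)

for lr in candidates:
    print(f"learning rate {lr}: final loss {losses[lr]:.3g}")
print("selected learning rate:", best_lr)
```

In this toy setup the largest candidate diverges and the smallest ones make limited progress in 50 steps, so the sweep selects a value in between. On a real model, the same loop would usually compare loss on held-out validation data rather than training loss.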

Choosing the Right Learning Rate

There are a number of different approaches that AI researchers can take to identify a model’s optimal learning rate. For many researchers, this is done via trial and error by experimenting with different learning rates and seeing how the model performs.

For instance, a researcher could start with a small initial learning rate, train the model for multiple epochs (full passes of the training data through the algorithm), and increase the learning rate by a given factor each iteration.

Throughout this process, you can monitor training loss, and once this loss starts to increase, you can choose a learning rate from just before that point.
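The procedure above can be sketched roughly as follows. This is a simplified illustration, assuming a one-weight linear model and a tiny made-up dataset; the function name `lr_range_test`, the starting value, and the growth factor are hypothetical choices rather than recommended settings.

```python
# Hypothetical toy data that follows y = 3x.
XS = [1.0, 2.0, 3.0, 4.0]
YS = [3.0, 6.0, 9.0, 12.0]


def mse(w):
    """Mean squared error between predictions w*x and targets y."""
    return sum((w * x - y) ** 2 for x, y in zip(XS, YS)) / len(XS)


def mse_grad(w):
    """Derivative of the mean squared error with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(XS, YS)) / len(XS)


def lr_range_test(start_lr=1e-4, factor=2.0, max_steps=50):
    """Increase the learning rate each iteration until the loss rises.

    Returns the last learning rate that still reduced the loss, or None
    if the loss already rises on the very first update.
    """
    w = 0.0
    lr = start_lr
    prev_lr = None
    prev_loss = mse(w)
    for _ in range(max_steps):
        w -= lr * mse_grad(w)         # one full-batch update at the current rate
        loss = mse(w)
        if loss > prev_loss:          # loss started to increase: stop here
            return prev_lr
        prev_lr, prev_loss = lr, loss
        lr *= factor                  # grow the learning rate by a fixed factor
    return prev_lr


chosen_lr = lr_range_test()
print("chosen learning rate:", chosen_lr)
```

Here each iteration is one full-batch update, which for this tiny dataset is also one epoch. With the assumed starting value and factor, the test stops once the rate has grown past the point where updates overshoot, and it returns the last rate that still reduced the loss.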

It’s important to highlight that there is no one-size-fits-all approach to training ML algorithms, and there are other techniques for finding the optimal value (see Methods for Calculating Learning Rate below).

Effect of Learning Rate

In practice, the chosen learning rate will determine the speed at which a model learns and how stably it performs. Once again, you want to pick a value that’s high enough that the model can learn quickly without changing its weights so extensively that its performance becomes unreliable.

Learning Rate and Overfitting

One of the biggest issues to consider when selecting an algorithm’s learning rate is overfitting. Overfitting occurs when a model fits its training data too closely and fails to generalize to new data.

Generally, lowering the learning rate will reduce the risk of overfitting, whereas increasing the learning rate can increase the risk of overfitting if the optimal value isn’t selected.

Learning Rate and Underfitting

Another issue to consider when selecting the learning rate is underfitting. Underfitting can occur when the chosen learning rate is too low, which causes the ML algorithm to converge so slowly that the model stays too basic to capture a dataset’s underlying patterns.

In short, underfitting means a model can’t learn from its training data and offers poor performance to the end user.

Methods for Calculating Learning Rate

There are a number of different approaches you can use to calculate the optimal learning rate.

Some of the most common are listed below:

Impact of Learning Rate on Model Performance

Selecting the optimal learning rate is critical to AI optimization and improving the model’s performance. Once again, if the value is too low, the learning process will take longer, and the model will either be unable to learn or will learn so slowly that it isn’t useful to the user.

If the value is too high, the model’s weights will change too much at each step, training can become unstable, and the model’s predictions will be inaccurate.

For this reason, selecting the learning rate carefully is essential for ensuring that the model has the accuracy, reliability, and stability that end users need when they interact with it in an application. It’s also important to ensure that the model is computationally efficient.

Learning Rate Pros and Cons

Different learning rates come with their own sets of advantages and disadvantages. We’ve listed some of the pros and cons of having a low or high learning rate below.

Pros

- High learning rate can lead to faster convergence

- High learning rate can reduce the time taken to train a model

- High learning rate can reduce GPU costs

- Lower learning rate can increase stability during training

- Lower learning rate reduces the risk of overfitting

Cons

- High learning rate can lead to overfitting

- High learning rate can result in unstable predictions

- Lower learning rate can increase the time taken to train a model

- Lower learning rate increases GPU costs

- Lower learning rate can increase the risk of underfitting

The Bottom Line

The learning rate is arguably the most important hyperparameter to consider when training a neural network. Selecting the right value puts a model in the position to learn at a reasonable speed while reducing the risk of instability in performance.